An Ordinary Tuesday in the Google and Samsung Environments: When a Korean Girl Meets Google. A Real-Time Analogue of a Computer Game

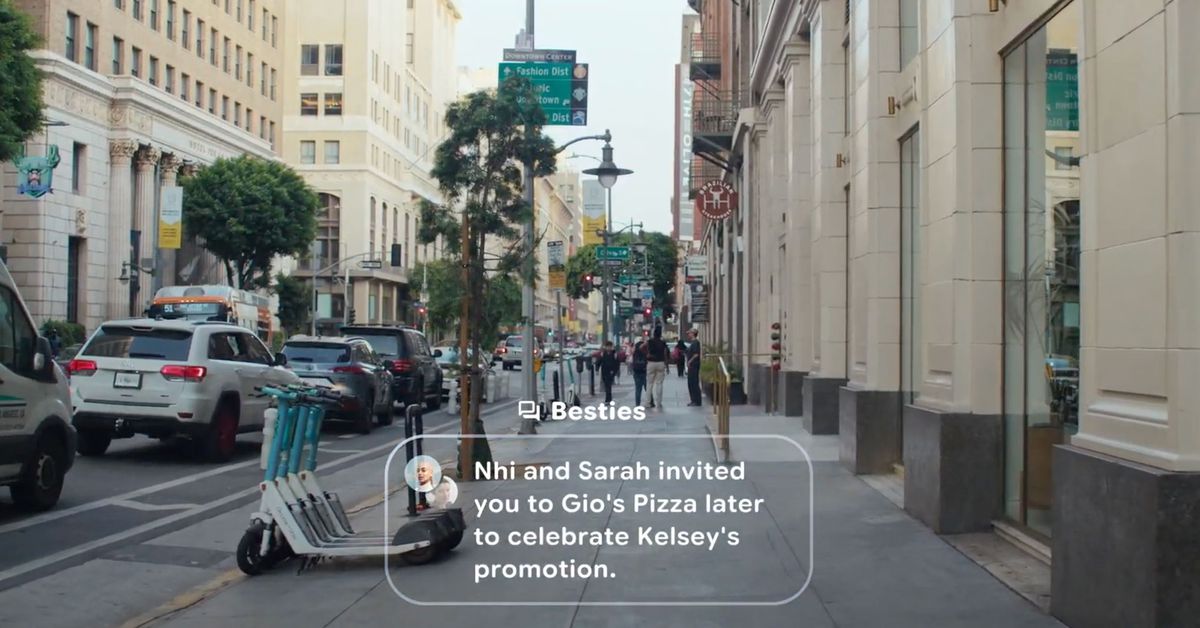

It’s an ordinary Tuesday. I’m wearing what look like ordinary glasses, surrounded by Google and Samsung representatives. One of them steps in front of me and speaks in Spanish. I don’t speak Spanish, but I can see her words translated into English subtitles. Reading them, I realize she’s describing what I’m seeing in real time.

Project Moohan and Smart Glasses, or: I Saw Google’s Plan to Put Android on Your Face (The Verge: “Google Is Putting Android on Your Face”)

It’s still early in the game, but not too late for most developers to start getting the software and hardware they need to build for Android XR, the new operating system. A headset Google is building with Samsung, dubbed Project Moohan, is reportedly going to ship next year. Android XR is, in some ways, the culmination of bets Google has been making on AI, the broader Android ecosystem, and the wearable future of technology. The real test is whether anyone will actually want to put these devices on.

Google wants everyone to know that the time is right for XR. Adding Gemini brings multimodal AI and natural-language input, which Google says will enrich how you interact with your surroundings. In one demo, Google had me ask Gemini for the title of a yellow book sitting on a shelf behind me. I’d glanced at it briefly earlier but hadn’t taken a photo. Gemini took a second, then offered up an answer. I whipped around to check: it was correct.

Source: I saw Google’s plan to put Android on your face

Moohan vs. Prototype Smart Glasses: The Key to Generative AI in Immersive Reality and the Fourth Industrial Revolution

Samsung’s headset feels like a cross between a Meta Quest 3 and the Vision Pro. The light seal is optional, so you can let the outside world in. It’s lightweight and doesn’t pinch my face too tightly. I don’t have to redo my hair, because my ponytail slots easily through the top. At first, the picture doesn’t look quite as sharp as the Vision Pro’s, but that changes once the headset automatically calibrates to my pupillary distance.

At this point, I start to feel a sense of déjà vu. I’m shown how to pinch and tap the side to bring up my apps. The eye calibration process feels awfully similar to the Vision Pro’s. If I want, I can retreat into an immersive mode to watch YouTube and Google TV on a distant mountain. I can place apps at different spots around the room. I’ve done all this before; this just happens to be Google-flavored.

For skeptics, it’s easy to scoff at the idea that Gemini, of all things, is what’s going to crack the augmented reality puzzle. Generative AI is having a moment right now, but not always in a good way. Outside of conferences full of tech evangelists, artificial intelligence is often viewed with suspicion and derision. But inside the Project Moohan headset, or wearing a pair of prototype smart glasses? I caught a glimpse of why both companies think the killer app is called Gemini.

When I ask for things, I don’t have to be precise. Usually, I get flustered talking to AI assistants because I have to remember the wake word, phrase my request clearly, and sometimes even specify which app I want to use.

In the Moohan headset, I can say, “Take me to JYP Entertainment in Seoul,” and it automatically opens Google Maps and shows me the building. If my windows get cluttered, I can ask it to reorganize them without lifting a finger. While wearing the prototype glasses, I watch and listen as Gemini boils a long, rambling text message down to its main point: can you pick up lemon, ginger, and olive oil from the store? I can also switch naturally from English to Japanese to ask what the weather is in New York, and get the answer in both spoken and written Japanese.

It’s not just my interactions with Gemini that linger in my mind, either; it’s the experiences that can be built on top of them. When I asked how to get somewhere, I saw turn-by-turn text directions. When I looked at the text, it turned into a map. It’s easy to imagine using something like that in real life.

Headsets can be a hard sell for the average person. Even though the glasses demo came with no concrete timeline, I’m more enamored with it. (Google made the prototypes, but it’s focusing on working with partners to bring hardware to market.) With either form factor, the culture has to be ready. Beyond Gemini, there needs to be a robust ecosystem of apps and experiences for the average person, not just early adopters.

Listening to Kim and Izadi talk, I want to believe. But I’m aware that my experiences were tightly controlled. I wasn’t given the freedom to try to break things, and I couldn’t take photos of the headset or glasses. At every step, I was steered through preapproved demos that the companies were fairly sure would work. I can’t fully believe in these devices until I can play with them without guardrails.

Even knowing that, I can’t deny that for an hour I felt like Tony Stark with Gemini as my Jarvis. For better or worse, that example has shaped so many of our expectations for how XR and AI assistants should work. I’ve tried plenty of headsets and smart glasses, and none of them lived up to it. This was the first time I’d experienced something that came close.

The choice of name for the OS is interesting. There are a million terms and abbreviations for this space, and they mean different things to different people. XR is probably the broadest of them, which seems to be why Google picked it. “When we say extended reality, we mean a whole spectrum of experiences from virtual reality to augmented reality and everything in between.”