The Wall Street Journal on Public Concerns About Meta Chief Mark Zuckerberg’s Social Media Feeds and the Loss of Trust

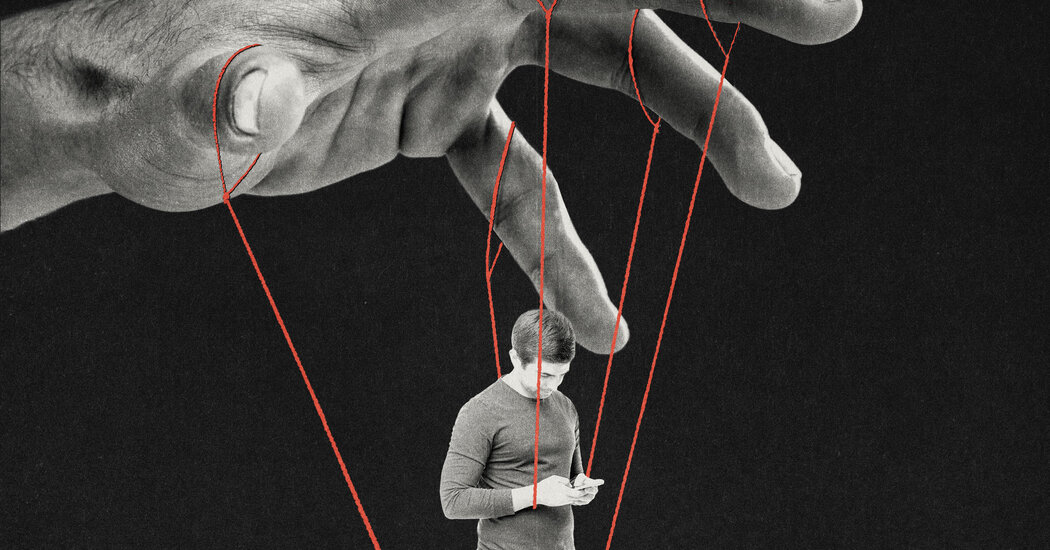

During a recent rebranding tour, sporting Gen Z-approved tousled hair, streetwear and a gold chain, Meta chief Mark Zuckerberg let the truth slip: Consumers no longer control their social-media feeds. Meta’s algorithm, he boasted, has improved to the point that it is showing users “a lot of stuff” not posted by people they had connected with, and he sees a future in which feeds show you “content that’s generated by an A.I. system.”

Start with the problem. Section 230, a snippet of law embedded in the 1996 Communications Decency Act, was initially intended to protect tech companies from defamation claims related to posts made by users. That protection made sense in the early days of social media, when we largely chose the content we saw, based on whom we “friended” on sites such as Facebook. Since we selected those relationships, it was relatively easy for the companies to argue they should not be blamed if your Uncle Bob insulted your strawberry pie on Instagram.

Over time, things grew darker. Not everything Uncle Bob shared was accurate, and the platforms’ algorithms prioritized outrageous, provocative content from anyone with internet access over more neutral, fact-based reporting. Yet the tech companies’ lawyers continued to argue that their clients were not responsible for the content uploaded to their platforms.

The New York Post and the Wall Street Journal are both owned by News Corp, which filed the lawsuit in federal court in the Southern District of New York.

The lawsuit includes examples of Perplexity “hallucinating” sections of New York Post stories. In AI terms, hallucination is when generative models produce false or wholly fabricated material and present it as fact.

In one case cited, Perplexity Pro first regurgitated, word for word, two paragraphs from a New York Post story about US Representative Jim Jordan sparring with European Union Commissioner Thierry Breton over Elon Musk and X, but then followed them with five generated paragraphs about free speech and online regulation that were not in the real article.

According to the lawsuit, mixing these made-up paragraphs with actual reporting and then attributing them to the Post could confuse readers. “Perplexity’s hallucinations, passed off as authentic news and news-related content from reliable sources (using Plaintiffs’ trademarks), damage the value of Plaintiffs’ trademarks by injecting uncertainty and distrust into the newsgathering and publishing process, while also causing harm to the news-consuming public,” the complaint states.

A WIRED investigation from this summer, cited in the lawsuit, detailed how Perplexity inaccurately summarized WIRED stories, including one instance in which it falsely claimed that WIRED had reported on a California-based police officer committing a crime he did not commit. The WSJ reported earlier today that Perplexity is seeking to raise $500 million in its next funding round, at an $8 billion valuation.