EU Provisional AI Regulation and Privacy Policies for Artificial Intelligence Companies

The European Union’s three branches provisionally agreed on its landmark AI regulation, paving the way for the economic bloc to prohibit certain uses of the technology and demand transparency from providers. Despite warnings from world leaders, the changes that will be required of artificial intelligence companies are still not known.

“In the end, we got some very minimal transparency obligations for GPAI systems, with some additional requirements for so-called ‘high-impact’ GPAI systems that pose a ‘systemic risk’,” says Leufer — but there’s still a “long battle ahead to ensure that the oversight and enforcement of such measures works properly.”

In fact, it seems a sizable amount of the actual legislation remained unsettled even days before the provisional deal was made. At a meeting between the European communications and transport ministers on December 5th, German Digital Minister Volker Wissing said that “the AI regulation as a whole is not quite mature yet.”

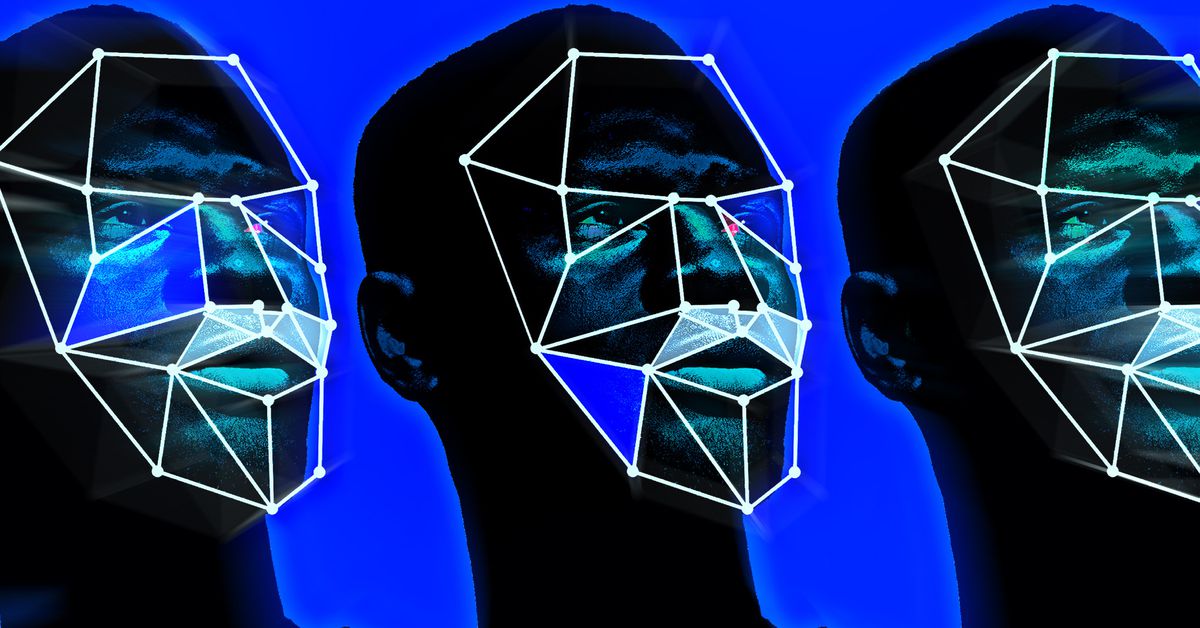

The European Parliament passed a ban on the mass public collection of biometric data such as irises and fingerprints. The ban covered creating facial recognition databases by indiscriminately scraping data from social media or CCTV footage; predictive policing systems based on location and past behavior; and biometric categorization based on sensitive characteristics like ethnicity, religion, race, gender, citizenship, and political affiliation. It also banned both retroactive and real-time remote identification, with the only exception allowing law enforcement to use delayed recognition systems to prosecute serious crimes after judicial approval. The European Commission and EU member states fought the ban and won concessions.

Observers believe the biggest impact will be to push American policymakers to move faster. In July, China passed guidelines for businesses that want to sell artificial intelligence services to the public. But the EU’s relatively transparent and heavily debated development process has given the AI industry a sense of what to expect. While the AI Act may still change, Aaronson said it at least shows that the EU has listened and responded to public concerns around the technology.

Lothar Determann, data privacy and information technology partner at law firm Baker McKenzie, says the fact that the AI Act builds on existing data rules could also encourage governments to take stock of what regulations they have in place. More mature artificial intelligence companies already have privacy protection policies in place that are in line with the new European law, according to the OneTrust chief strategy officer. For companies whose strategy is already in place, the AI Act will be an additional sprinkle on top.

Navrina Singh, a member of the National Artificial Intelligence Advisory Committee, believes that there is still a lot of work to be done.

This doesn’t mean the US will follow the same approach, but it might expand data transparency rules or allow more discretion in model selection.

The AI Act also won’t apply its potentially stiff fines to open-source developers, researchers, and smaller companies working further down the value chain — a decision that’s been lauded by open-source developers in the field. GitHub chief legal officer Shelley McKinley said it is “a positive development for open innovation and developers working to help solve some of society’s most pressing problems.” GitHub, a popular open-source development hub, is owned by Microsoft.

There are lots of grey areas around the use of copyrighted data in model training, as the Act hasn’t clarified how companies should treat it. As a result, it offers no meaningful incentive to avoid using copyrighted data.

OpenAI, Microsoft, Google, and Meta are expected to keep fighting for dominance, particularly in the US, as they navigate regulatory uncertainty.

“Does the data transparency standard change the behavior of companies around data?” asks Susan Ariel Aaronson, a digital trade and data governance expert at George Washington University

“Under the rules, companies may have to provide a transparency summary or data nutrition labels,” says Susan Ariel Aaronson, director of the Digital Trade and Data Governance Hub and a research professor of international affairs at George Washington University. “But it’s not really going to change the behavior of companies around data.”