The End of the Darkness: Artificial Intelligence Embedded in the History of Science, Technology, and the First Birthday of ChatGPT

Science fiction has, for decades, been about predicting the future and warning against it. Star Trek did not envision the metaverse, but Neal Stephenson warned about it in his novel Snow Crash.

As artificial intelligence has entered every corner of life, it has been easy to observe the lessons sci-fi tried to teach. Data was an android on Star Trek: The Next Generation who worked alongside organic beings. HAL 9000 in 2001: A Space Odyssey goes all out to save its own life.

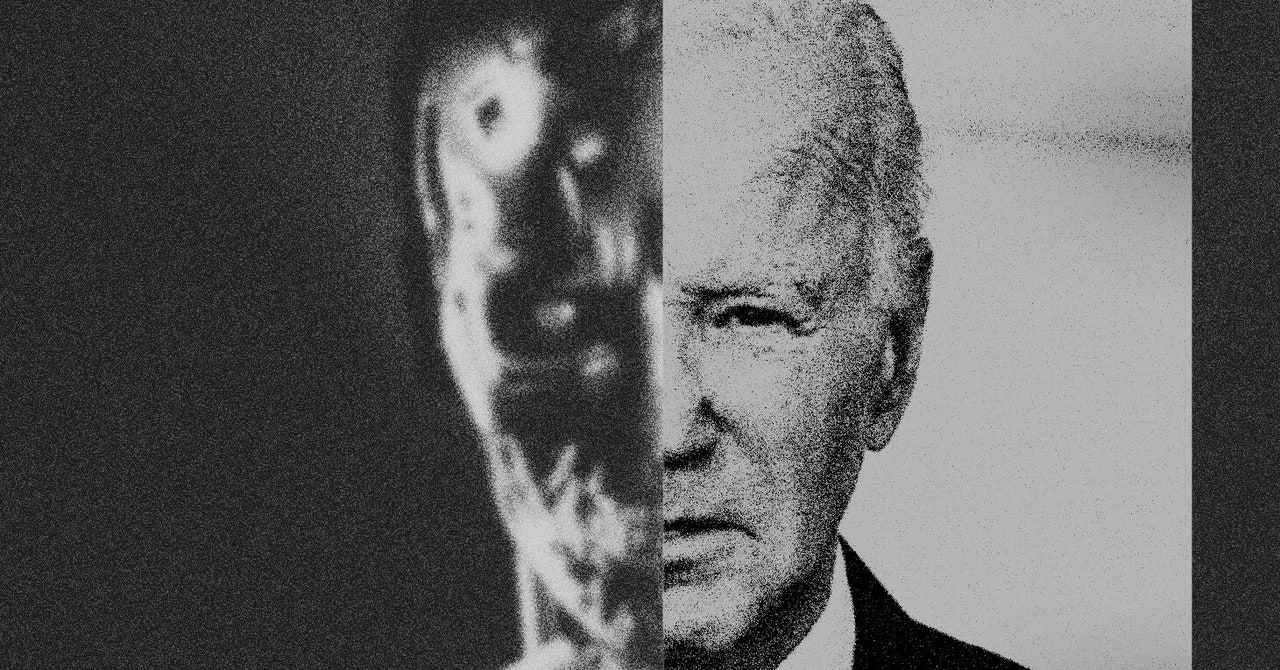

Earlier this week, US President Joe Biden signed a massive executive order outlining policies on the use and development of AI going forward. Applying the thinking of both AI doomers and Trekkies, it aims to encourage AI’s greatest minds to work for the government and includes stipulations to keep the technology from becoming a national security threat.

There’s a reason WIRED called Dead Reckoning the “perfect AI panic movie.” In it, an AI known as The Entity becomes fully sentient and threatens to use its all-knowing intelligence to control military superpowers all over the world. It is, as Marah Eakin wrote for WIRED earlier this year, the ideal “paranoia litmus test”: when something is the Big Bad in a summer blockbuster, it’s the thing people are most afraid of right now. The Entity must seem horrifying to someone like Biden, who is aware of the brinkmanship going on around the world. It also raises the question: Did no one watch The Terminator?

As ChatGPT’s first birthday approaches, presents are rolling in for the large language model that rocked the world. From President Joe Biden comes an oversized “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” And UK prime minister Rishi Sunak threw a party with a cool extinction-of-the-human-race theme, wrapped up with a 28-country agreement (counting the EU as a single country) promising international cooperation to develop AI responsibly. Happy birthday!

Before anyone gets too excited, let’s remember that it has been over half a century since credible studies predicted disastrous climate change. Now that the water is literally lapping at our feet and heat is making whole chunks of civilization uninhabitable, the international order has hardly made a dent in the gigatons of fossil fuel carbon dioxide spewing into the atmosphere. The United States has just installed a climate denier as the second in line to the presidency. Will AI regulation progress any better?

Among the things the document lacks is firm legal backing for all the regulations and mandates that may result from the plan: executive orders are often overturned by the courts or superseded by Congress, which is contemplating its own AI regulation. (Although, don’t hold your breath, as a government shutdown looms.) And many of Biden’s solutions depend on self-regulation by the industry that’s under examination, whose big powers had substantial input into the initiative.

These efforts are meaningful for a branch of science that relies heavily on expensive computing infrastructure, says policy researcher Helen Toner at Georgetown University’s Center for Security and Emerging Technology in Washington DC. “A major trend in the last five years of AI research is that you can get better performance from AI systems just by scaling them up,” she says, adding that doing so is expensive.

Yoshua Bengio, an artificial intelligence pioneer, says that training a frontier AI system costs hundreds of millions of dollars. “In academia, this is currently impossible.” The research resources aim to give the public access to these capabilities.

Bengio says it’s a good thing. “Right now, all of the capabilities to work with these systems is in the hands of companies that want to make money from them. We need academics and government-funded organizations that are really working to protect the public to be able to understand these systems better.”

The executive order instructs agencies that fund life-sciences research to establish standards for protecting against the use of AI to engineer dangerous biological materials.

Agencies are asked to help skilled immigrants study and work in the United States. The National Science Foundation (NSF) must fund and launch at least one regional innovation engine that prioritizes AI-related work and, within the next 18 months, establish at least four national AI research institutes, on top of the 25 currently funded.

The National Artificial Intelligence Research Resource will be launched within 90 days, according to Biden’s order. There is a fair amount of excitement about this.

Plans for the UK AI Research Resource (AIRR) were announced in March. At the summit, the government said it would triple the AIRR funding pot to £300 million in order to transform UK computing capacity. Relative to its population and GDP, the UK investment is larger than the US proposal.

The plan is backed by two new supercomputers: Dawn in Cambridge, which aims to be running in the next two months; and the Isambard-AI cluster in Bristol, which is expected to come online next summer.

Bengio on what comes next, and a lesson from pharma

“We are on a trajectory to build systems that are extremely useful and potentially dangerous,” he says. “We already ask pharma to spend a huge chunk of their money to prove that their drugs aren’t toxic. We should do the same.”

Yoshua Bengio, an artificial intelligence pioneer and the director of the Quebec AI Institute, says that there are some things that are going to come out next year.