What We Expect to Learn from Google I/O 2024: Live Blogs and Commentary on YouTube and Google Streaming Features (Extended Version)

We have a few reporters at I/O in Mountain View, California, including senior writers Lauren Goode and Paresh Dave, senior reviews editor Julian Chokkattu, and staff writer Reece Rogers. Along with senior writer Will Knight and editorial director Michael Calore (who will be watching from their desks, remotely), the team will provide live updates and commentary once we’re underway.

Google’s keynote presentation will start at 10 am Pacific, 1 pm Eastern. We will start the live blog at 9:30 am Pacific, 12:30 pm Eastern. This text will disappear and will be replaced by the feed of live updates, just like magic.

You may be wondering why we are publishing a live blog when the show is also being streamed on YouTube. The short answer is easy: We love live blogs! The longer answer is that the I/O keynote is a two-hour advertisement for all things Google. We expect Google to break some news in this keynote, but what you see on your screen will be missing a lot of the relevant context. That’s what the live blog is for: to distill and analyze the news coming from the stage and to give you the context that helps you become better informed. Also, live blogging is fun for us to do. We can have this, okay?

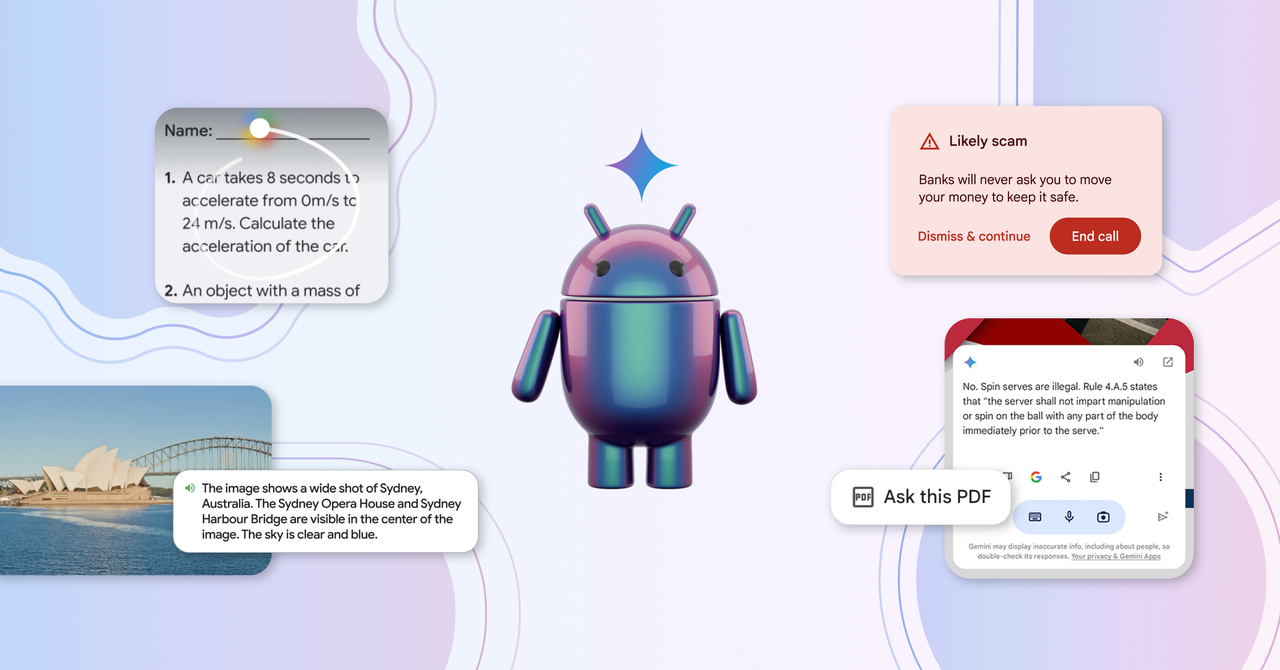

Today, at Google’s I/O developer conference in Mountain View, California, the new features Google is touting in its Android operating system feel like the Now on Tap of old—allowing you to harness contextual information around you to make using your phone a bit easier. Except this time, these features are powered by a decade’s worth of advancements in large language models.

You can read our full rundown of what to expect from Google I/O 2024. We’ll see you back here at 9:30 Pacific for our commentary. The live event starts half an hour later.

Circle to Search: an AI-powered assistant to search for clues, graphs, and physics on the phone (a decade after Google)

A decade ago, Google showed off a feature called Now on Tap that let you press and hold the home button to see contextual information related to what was on the screen. Talking about a movie with a friend? Now on Tap could pull up the title without leaving the messaging app. Looking at a restaurant in Yelp? The phone could surface OpenTable recommendations with just a tap.

I was fresh out of college, and these improvements felt exciting and magical. Its ability to understand what was on the screen and predict the actions you might want to take felt future-facing. It was one of my favorite features. It slowly morphed into Google Assistant, which was great in its own right, but not quite the same.

Google received feedback from students about Circle to Search, and that feedback is the reason for its latest feature. Circle to Search can now be used to work through physics and math problems when a user circles them, without the user having to leave the syllabus app.

It was clear that Gemini was showing students how to solve the problems, rather than just providing answers. Later this year, Circle to Search will be able to solve more complex problems involving diagrams and graphs. This is all powered by Google’s LearnLM models, which are fine-tuned for education.

“The way to look at it is that it’s an opt-in experience on the phone. Over the years, I think they have become more advanced and evolving. We don’t have anything to announce today, but there is a choice for consumers if they want to opt into this new AI-powered assistant. People are trying out the new product, and we are seeing it, and we’re getting a lot of great feedback.”

Project Astra: Asking for Photos of the Best Place to See the Northern Lights with Google’s Artificial Intelligence (Ask Photos)

It is as if Google took the index cards for the screenplay it has been writing for the past 25 years and threw them into the air. Also: The screenplay was written by AI.

The changes to search have been in the works for a long time. Last year the company carved out a section of its Search Labs, which lets users try experimental new features, for something called Search Generative Experience. It was unclear whether, or when, those features would become a permanent part of the search engine. The answer is, well, now.

Google says it has made a customized version of its Gemini AI model for these new Search features, though it declined to share any information about the size of this model, its speeds, or the guardrails it has put in place around the technology.

One example, provided by WIRED, is a question about the best place to see the northern lights. Instead of listing web pages, Google will tell you in authoritative text that the best places to see the northern lights, aka the aurora borealis, are in the Arctic Circle in places with minimal light pollution. It will also offer a link to NordicVisitor.com. But then the AI continues yapping on below that, saying “Other places to see the northern lights include Russia and Canada’s northwest territories.”

With the new ability to search with a video, you are no longer limited to searching with still images. That means you can take a video of something you want to search for, ask a question during the video, and Google’s AI will attempt to pull up relevant answers from the web.

A new feature coming from the internet giant this summer could benefit just about anyone who has been taking photos for a while. “Ask Photos” lets Gemini pore over your Google Photos library in response to your questions, and the feature goes beyond just pulling up pictures of dogs and cats. Google CEO Sundar Pichai asked what his license plate number was. The answer gave the number, followed by a picture so he could make sure it was correct.

With Project Astra, the company hopes to build a virtual assistant that can look at what your device sees, remember where you are, and do things for you. It powers many of the most impressive demos from I/O this year, and the company’s goal is for it to be an honest-to-goodness agent that can not only talk to you but also do things on your behalf.

Veo, Google’s answer to OpenAI’s Sora, is a new generative AI model that can output 1080p video based on text-, image-, and video-based prompts. The videos can be made in a number of styles and can be altered with more prompts. The company is already offering Veo to some creators for use in YouTube videos but is also pitching it to Hollywood for use in films.

Gemini Nano: A Simulated Chatbot for Android and its Application to Video Detection and Sense-based Content Generating

Google is rolling out a custom chatbot creator called Gems. Similar to OpenAI’s GPTs, Gems lets users give Gemini instructions that customize what it does and how it responds. For example, Gemini Advanced subscribers will soon be able to set up a positive running coach that offers daily motivation and running plans.

If you are on an Android phone or tablet, there is a chance that you will be able to get help with a math problem on your screen. Google’s AI won’t solve the problem for you (so it won’t help students cheat on their homework), but it will break it down into steps that should make it easier to complete.

Using on-device Gemini Nano AI smarts, Google says Android phones will be able to help you avoid scam calls by looking out for red flags, like common scammer conversation patterns, and then popping up real-time warnings. Later this year the company will give more details on the feature.

Users will soon be able to ask Gemini questions about videos on-screen, and it will answer based on the automatic captions. For paid Gemini Advanced users, it can also ingest PDFs and offer information. Those and other multimodal updates for Gemini on Android are coming over the next few months.

Google announced that it’s adding Gemini Nano, the lightweight version of its Gemini model, to Chrome on desktop. You will be able to use the built-in assistant on your device to generate text for products and social media posts from within the Chrome browser.

Google says it’s expanding what SynthID can do: It will embed watermarking into content created with its new Veo video generator, and it can now also detect AI-generated videos.